Nov. 19, 2021

‘Touchable spoken words’ bring the fantastic to life

Imagine a fantastic world where you can not only see the words that someone is speaking as they speak them, but interact with them as well; it’s almost like magic. Aside from the obvious fun and wonder that would come with such an experience, the possibilities for accessible learning and enriched conversations are endless.

With a pitch centred on this idea, Dr. Ryo Suzuki, PhD, an assistant professor in the Department of Computer Science in UCalgary's Faculty of Science, and a team of graduate students recently won in the Snapchat-hosted Snap Creative Challenge, which invited submissions about the Future of Co-Located Social AR (augmented reality).

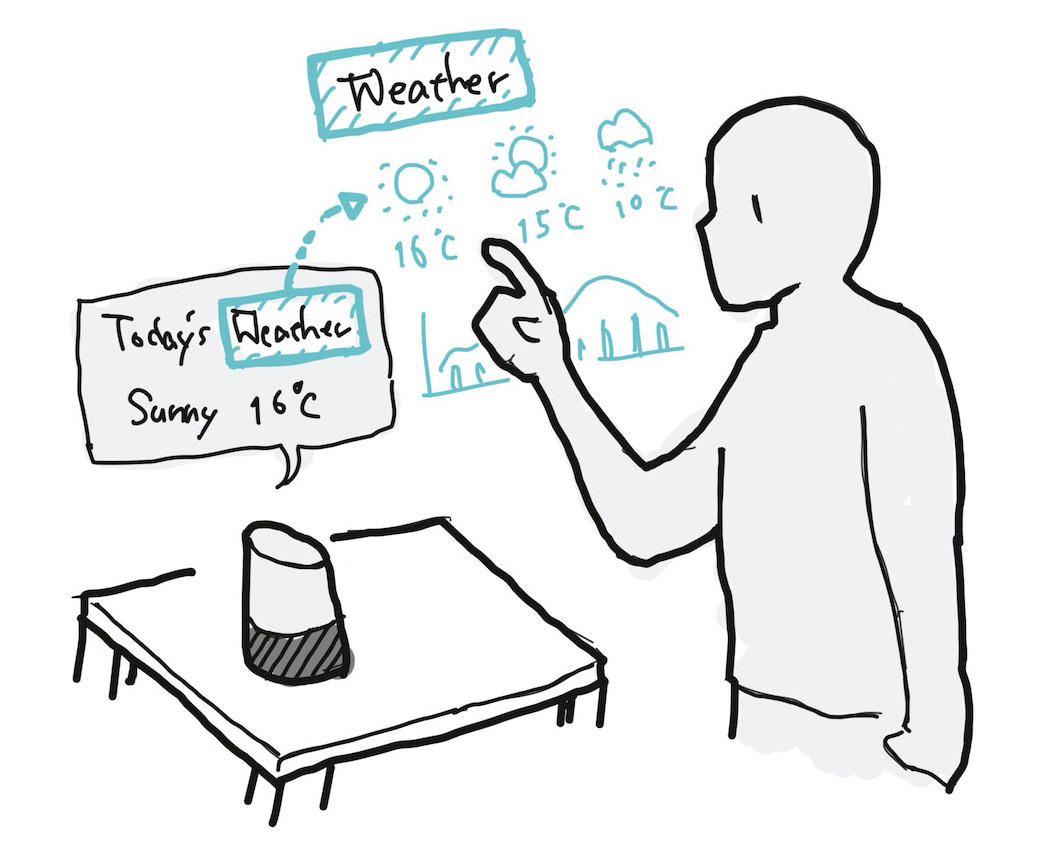

Their project, “Touchable Spoken Words: Augmenting In-Person Verbal Communication,” explores a new interaction technique for transcribed spoken language in augmented reality. The vision is to let the user not only “see” the transcribed words in augmented reality, but also “interact” with them in real time.

“Since the prehistoric era, humans have communicated through speech and voice, and we still do. However, this communication channel has not changed over thousands of years,” says Suzuki, whose research focuses on human-computer interaction.

“Our team wanted to explore how we could augment this most traditional human-to-human communication with the power of AR. In our submission to the Snap Creative Challenge, and in ongoing research, our team’s goal is to transform the captured spoken words into an interactive and dynamic medium that the user can touch, manipulate, and interact with in the real world.”

We’re exploring a future where people can interact with words.

Current technology for digitally enhanced conversations always includes a screen. In its present iteration, Suzuki’s proposal would require wearing an AR headset; however, he is aiming to eventually take screens out of the equation to make these types of interactions more natural.

In brainstorming ideas with graduate students Shivesh Jadon, Adnan Karim, and Neil Chulpongsatorn for the Snap Creative Challenge, the team sought to explore different ways to enhance social interactions such as collaboration, conversations, or presentations.

“Let’s say I was talking to a friend about a camping trip I’d gone on. [Using our proposed technology] when I say the name of the campground, I would be able to use AR to pull up photos, 3D images, maps, or other information right in front of us,” Suzuki explains.

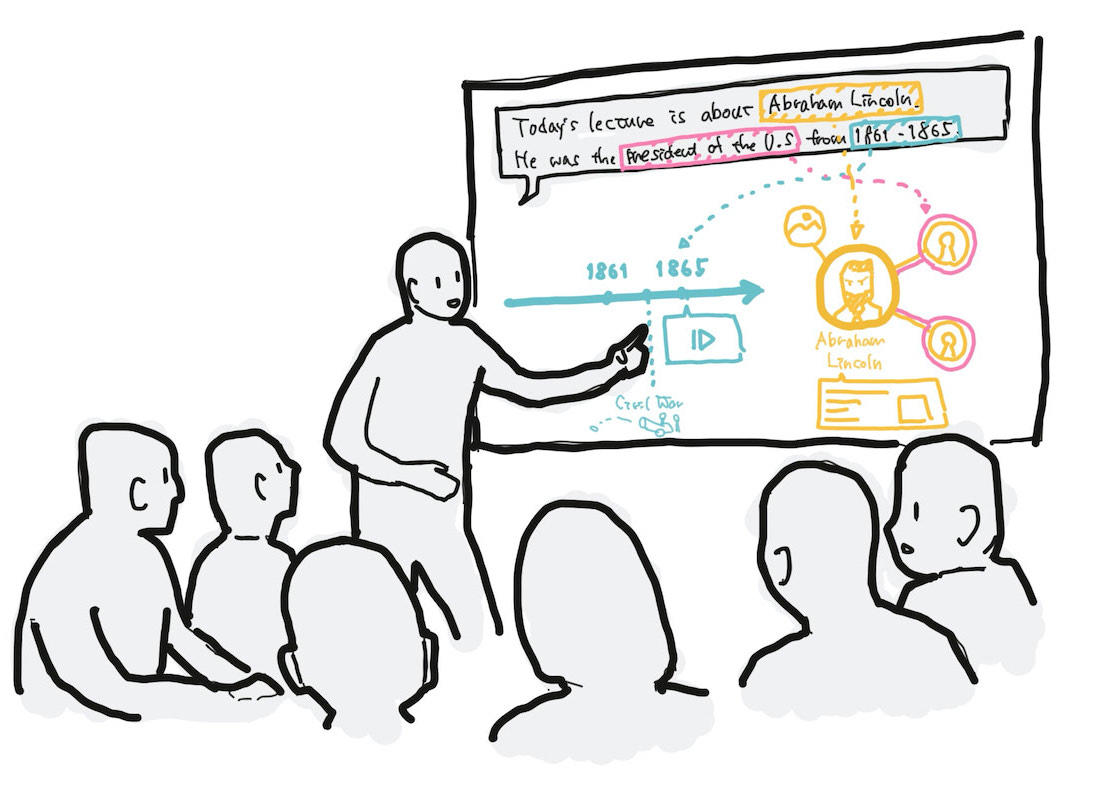

The technology could also have applications in teaching and learning. Suzuki offers the following example: “If a history teacher was talking about Abraham Lincoln, they could extract some basic information about him from Wikipedia, Google, Google Images, and other sources.

“We can also probably do this kind of thing in the real world through conversation, because your natural conversation provides a pretty natural keyword search,” he says.

Karim says the end goal is to visualize speech. “That’s what AR provides for us,” he says. “Imagine that, while you’re talking to your friends, the words are popping up in front of you and you can click a certain keyword that would be relevant to a certain visualization. It’s pretty much visualizing your thoughts as you speak. We can apply that to a lot of use cases.”

The team is currently prototyping with mobile devices, but is exploring ways to bring this kind of interaction to more hands-free devices.

Karim points to a recent wearable technology collaboration between Facebook and Ray-Ban, the Ray-Ban Stories smart glasses, as a device that could use their technology. “The product is a really stylish pair of sunglasses, but they have AR technology built in,” he says. “A lot of other companies are probably working on similar products. It’s really on the rise.”

While Suzuki says that some technical design components — such as keyword extraction and tracking hand motion — will be challenging, work is moving ahead swiftly.

The team aims to have a working prototype ready for testing in February 2022.

Shivesh Jadon, Ryo Suzuki, and Adnan Karim

Snap Creative Challenge is an invitation to universities to reimagine augmented reality storytelling. Snap Research serves as an innovation engine for Snap Inc., a camera company that believes that reinventing the camera represents our greatest opportunity to improve the way people live and communicate. Snap's products empower people to express themselves, live in the moment, learn about the world, and have fun together.